How To Make a Music Video with Artificial Intelligence

Here's how to create AI music videos in any style, to match the vibe of your songs. Just upload a track, come up with a visual theme, and let Neural Frames handle the rest.

The launch of MTV and VH1 in the '80s changed the way people experienced music forever. Did videos kill the radio star or make them even more famous? Fans could finally see their favorite artists come to life, without waiting all year for a live concert. Music videos revealed levels of depth and personality that were previously left up to the imagination.

In these early days, record labels controlled production and distribution. This helped stakeholders to control the public’s perception of an artist. But the facade began to fall apart in 2005, when YouTube leveled the playing field. Suddenly there was a platform where everyday creators and independent artists could share their vision with the world, free from the dictates of a label.

It's been nearly two decades since YouTube launched and became the preferred destination for watching music videos. Streaming platforms have disrupted artist revenue and indie musicians have less budget to create elaborate content. That's one of the main reasons that people are learning how to make a music video with artificial intelligence.

AI text-to-video generators like Neural Frames, Runway, and Pika now provide interfaces for controlling camera angles and modulating imagery based on musical parameters.

3 Popular AI Music Video Generation Apps

In this article we'll provide an overview of how to create music videos with AI. Before we jump into a tutorial, let's have a look at three of the most popular solutions available currently.

Neural Frames

Neural Frames is the most accessible solution for making AI music videos. Unlike Pika and Runway, anyone can sign up and start using the service immediately without joining a waitlist. The platform turns text prompts into frame-by-frame animations generated with Stable Diffusion.

Users have access to several models, each with their own array of visual styles to draw from. Create photorealistic and cinematic imagery or trippy animations with graphics processing that’s 3 times faster than competitors.

Premium subscriptions come with 4K resolution, more custom models, and unlimited video generation length. At 25 frames per second, the final video content will be 40% more fluid than other text-to-video generators.

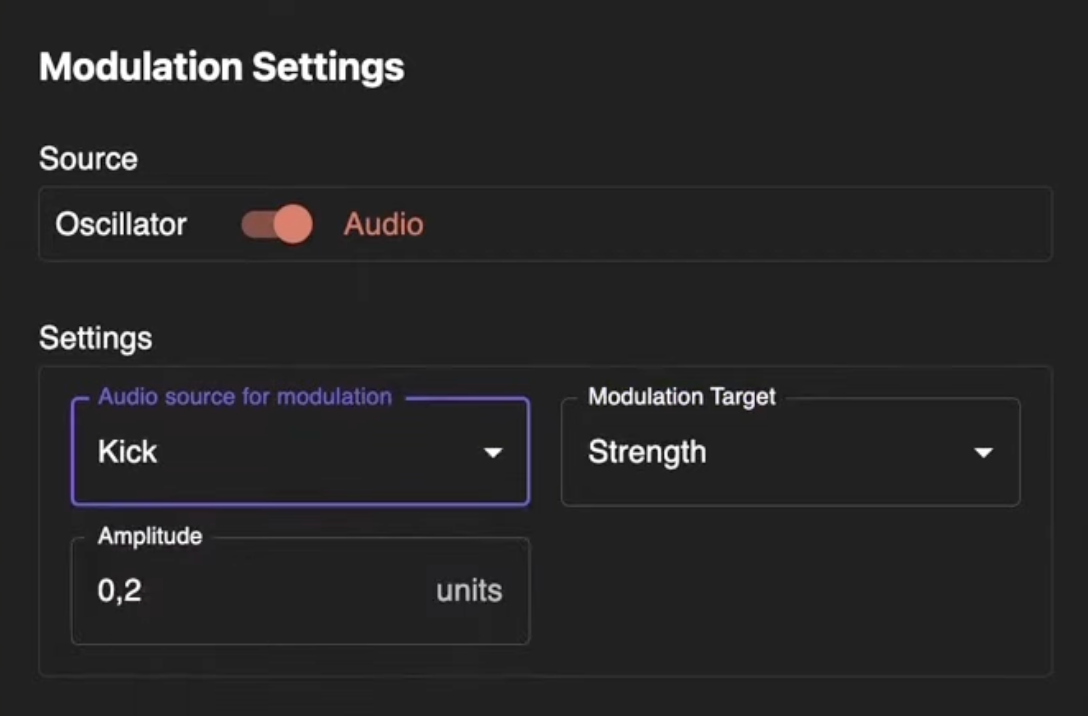

Neural Frames caters to music videos specifically with AI-powered audio stem-separation. Select an instrument from your track that has prominent audio transients, like a kick drum or snare. Then set up modulations that rotate, zoom or even phase-shift the imagery whenever that impact takes place. In this way, your music and videos will stay closely synchronized with one another.

Runway and Pika

In 2018, Runway emerged as an exciting new AI platform that utilized cutting-edge technology to transform images, video clips, and text prompts into interactive videos. Its all-in-one video creation and editing system eliminated the necessity for conventional video production techniques. With 30+ advanced AI tools, Runway empowers users to produce lifelike, seamless graphics and 3D videos in various styles and formats.

A second company called Pika Labs launched in 2023 and similarly aims to create realistic videos from text, images, and other videos. Pika lets users control camera movement with simple commands like "pan right," "zoom in," and "rotate clockwise" to make videos more cinematic. It also offers a default frame rate of 24 frames per second, matching the industry standard for film production.

However, both Runway and Pika have some accessibility limitations. Runway costs 5 cents per minute of computation, which adds up quickly when you're trying to render enough content for a full music video. Pika is still in beta, so users have to sign up and wait for access (which for some people has apparently never come).

These platforms also have a limited range of motion in their short film output. One TechCrunch reviewer says the frame rate of Runway’s four-second videos is low and looks “slideshow-like in places.” While some consider the motion produced by Pika superior to Runway, it sometimes fails to add complex or exaggerated motions.

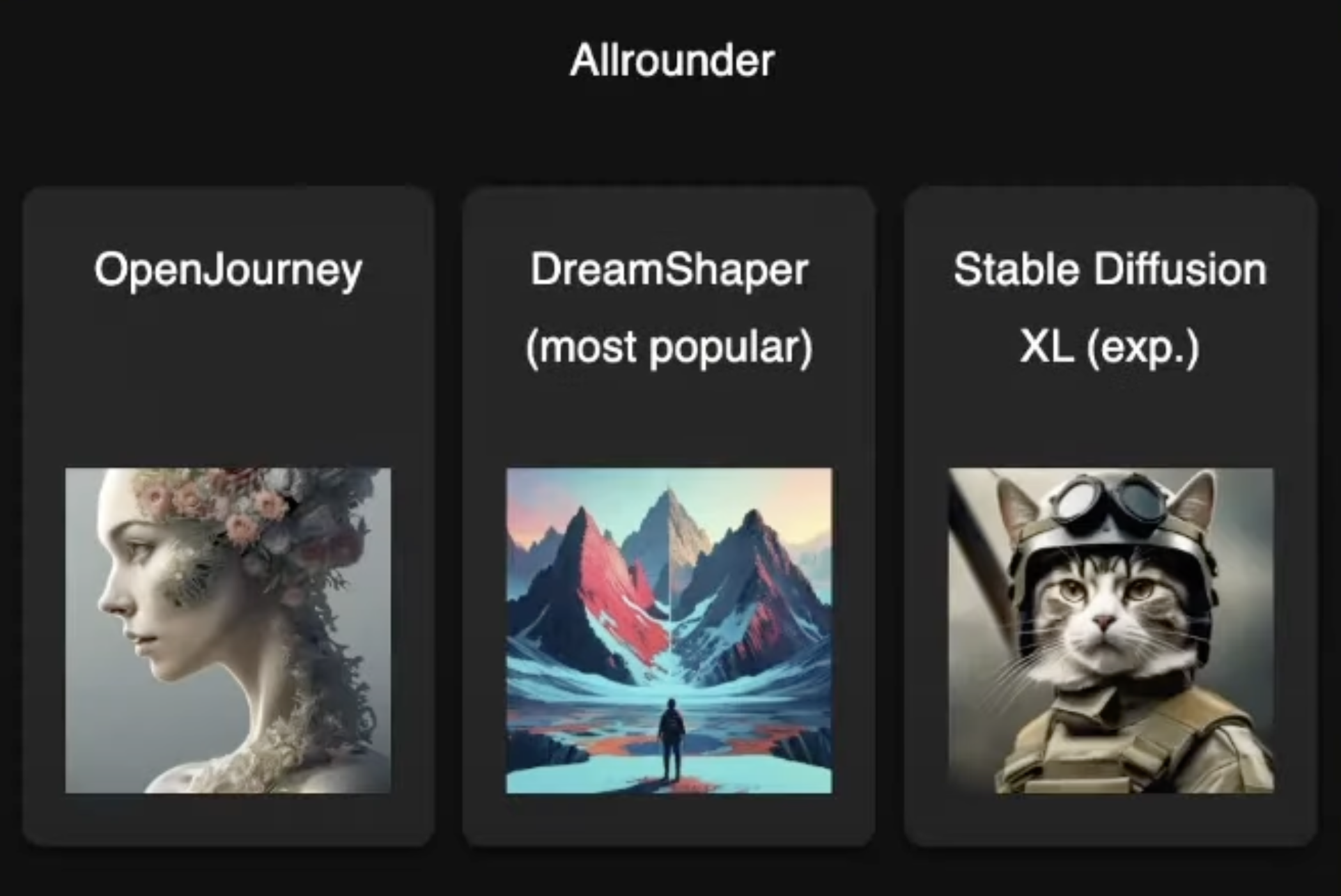

Neural Frames provides half a dozen different AI image generation models to choose from, making for a diverse range of visual possibilities.

How to Make a Music Video with Neural Frames

So now that we've covered some of the basics, are you ready to try your hand at making your first music video with artificial intelligence? We've included an illustrated walkthrough below for quick reference, but if you get lost, just refer to the tutorial video shown above.

Getting Started with Neural Frames

Sign up with your email to create an account and select the plan that suits your requirements. The tiered packages - Newbie, Navigator, Knight, and Ninja - offer a range of features and customizable options. The most popular is the Neural Knight, which comes with 1080p upscaling.

Select your text-to-image model

Pick from a selection of six standardized models that generate distinct effects, categorized into two groups: The Allrounder, which includes OpenJourney, DreamShaper, and Deliberate, and The Specialists, which consists of Realistic Vision, Analog Diffusion, and Anything. There are also customizable options to train the platform using your own images.

Generate video clips from text prompts

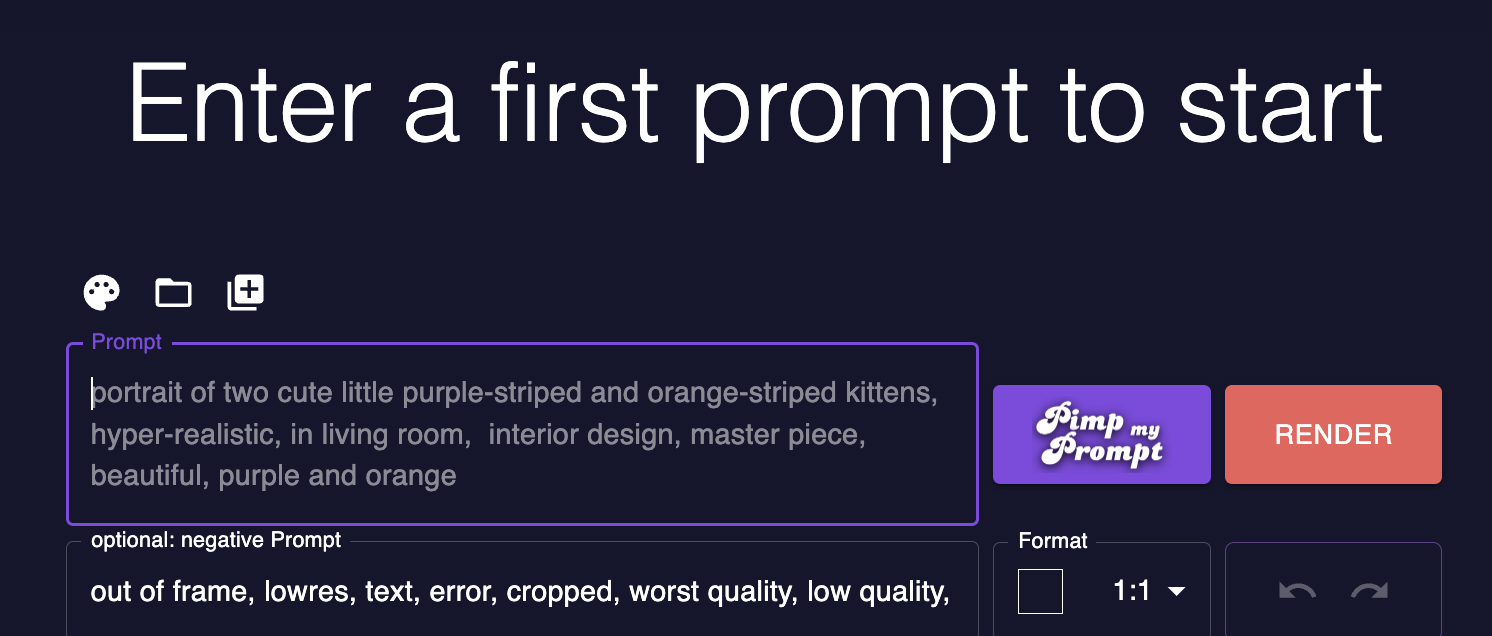

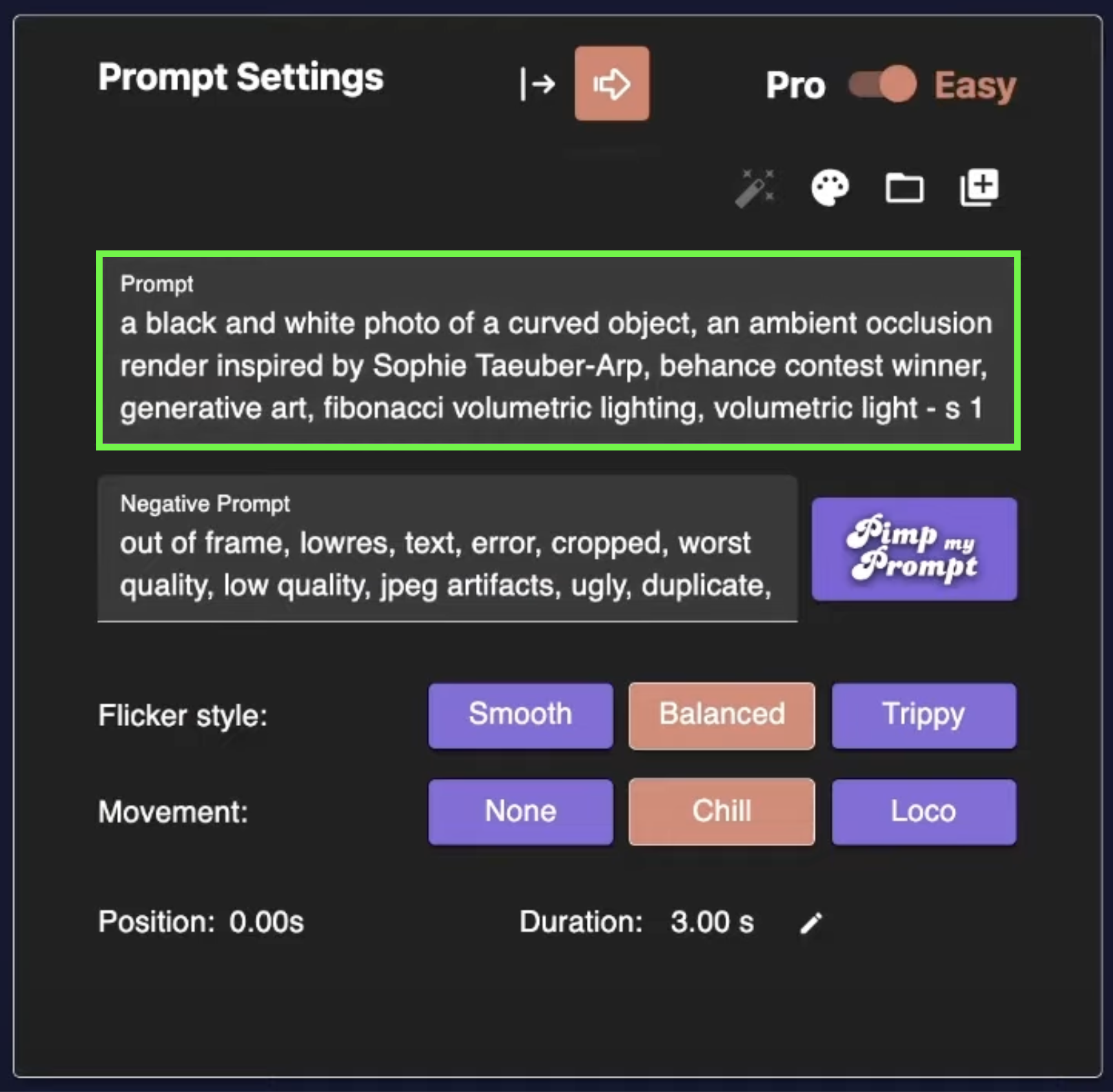

Once you have clicked on your preferred text-to-image model, click “create new” to open the First Frame Editor.

- Click on the prompt text area and describe the initial image you want.

- Having trouble coming up with a rich description of your vision? Click the Pimp My Prompt button to enhance your prompt with AI.

- Select your video format (1:1, 4:3, 3:4, 16:9, or 9:16).

- Click on “render”.

- Neural Frames automatically generates four images according to your selected parameters. Click the one you like the best, and you’ll be directed to the Video Editor.
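To make the format choice concrete, here is a minimal sketch of how an aspect ratio could translate into pixel dimensions. It is not how Neural Frames sizes its frames: the function name `frame_size`, the 1024-pixel long side, and the rounding step are assumptions for illustration. The rounding reflects the fact that Stable Diffusion pipelines generally expect widths and heights that are multiples of 8.

```python
def frame_size(aspect, long_side=1024, multiple=8):
    """Return (width, height) in pixels for an aspect ratio such as "16:9".

    long_side and multiple are illustrative assumptions, not Neural Frames
    settings. The longer edge is set to long_side and both edges are
    rounded to the nearest multiple of `multiple`.
    """
    w_ratio, h_ratio = (int(part) for part in aspect.split(":"))
    if w_ratio >= h_ratio:
        width = long_side
        height = long_side * h_ratio / w_ratio
    else:
        height = long_side
        width = long_side * w_ratio / h_ratio

    def snap(value):
        # Round to the nearest multiple, never going below one multiple.
        return max(multiple, int(round(value / multiple)) * multiple)

    return snap(width), snap(height)


for ratio in ("1:1", "4:3", "3:4", "16:9", "9:16"):
    print(ratio, frame_size(ratio))
```

Landscape formats such as 16:9 suit YouTube, while 9:16 is the usual choice for vertical social feeds.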

Later you'll be able to continue creating new images on the prompt settings screen.

Update: We also have a helpful tool, called the Stable Diffusion Prompt Generator, that is available for free for everybody.

Import your audio into the Video Editor dashboard

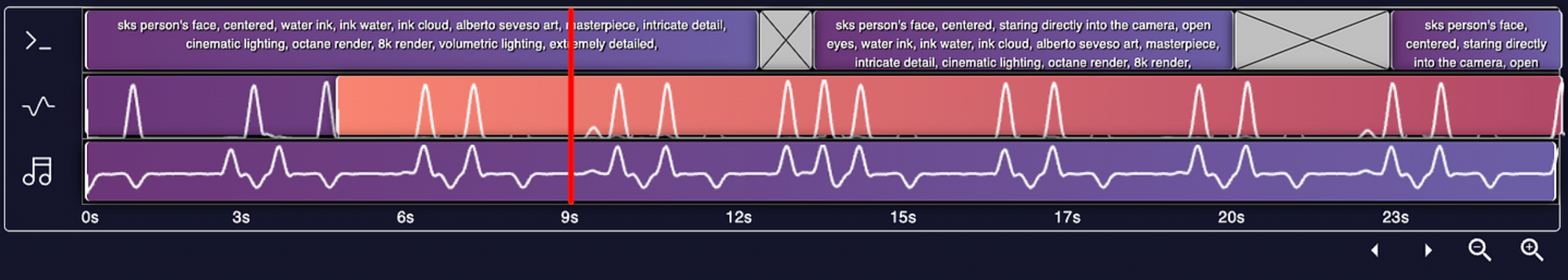

The Video Editor consists of three elements: Prompt Setting, the Preview Window, and the Timeline, divided into three tracks: video, modulation, and song.

Click on the music note icon on the Timeline’s audio track to import a song from your device. Separate the stems with the click of a button and use the “add” button on individual tracks to include them.

Select your audio source to determine which instrument stem will cause the music video to change in the desired way.

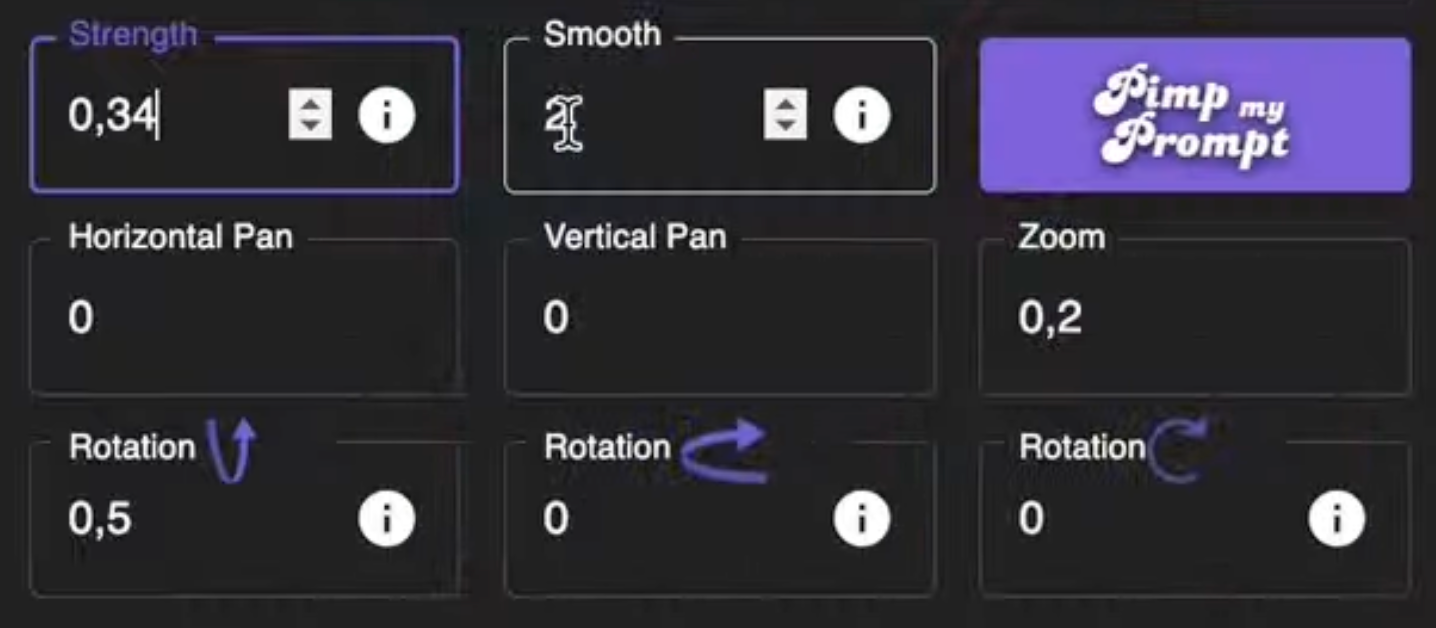

Configure modulation settings before rendering footage

- Click the add button next to the stem to add it to the modulation timeline.

- On the left-hand side, you can adjust the Strength and Smooth parameters to create different visual effects.

- If you’re not in the Pro setting, we advise clicking Trippy.

- To avoid distortion, don’t change the Smooth value mid-video.
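For readers curious about what parameters like Strength and Smooth might do under the hood, here is a rough sketch of audio-reactive modulation. It is an assumption about the general technique, not Neural Frames' actual implementation: the function `modulation_curve`, its parameter ranges, and the sample kick-drum data are all hypothetical. The idea is that a stem's loudness on each frame is smoothed and scaled, and the result drives an effect such as zoom.

```python
def modulation_curve(envelope, strength=1.0, smooth=0.5):
    """Map a per-frame loudness envelope (0..1) to per-frame effect amounts.

    strength scales the effect. smooth is an exponential-smoothing factor
    in [0, 1): 0 follows the envelope exactly, values near 1 react slowly.
    Both names mirror the editor's labels but their meaning here is assumed.
    """
    if not 0.0 <= smooth < 1.0:
        raise ValueError("smooth must be in [0, 1)")
    curve = []
    previous = 0.0
    for level in envelope:
        # Blend the previous smoothed value with the new loudness level.
        previous = smooth * previous + (1.0 - smooth) * level
        curve.append(strength * previous)
    return curve


# Hypothetical kick-drum stem: loud hits on frames 0 and 4, silence elsewhere.
kick = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]

sharp = modulation_curve(kick, strength=2.0, smooth=0.0)  # snaps on each hit
soft = modulation_curve(kick, strength=2.0, smooth=0.5)   # hits fade out gradually
print(sharp)
print(soft)
```

With no smoothing the effect jumps on every hit and drops straight back to zero. With smoothing each hit peaks lower and decays over the following frames, which tends to look less jittery.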

Continue generating video clips for the full song

- Click on the video timeline and type another prompt in the “text box” to add another clip.

- Continue doing so until you have generated enough footage for your video.

- To zoom in and add other effects, click on individual clips in the video timeline, then click “zoom” and adjust as needed.
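Before generating clips, it can help to estimate how much footage a song needs. The sketch below is a hypothetical planning aid, not part of Neural Frames: it splits a song evenly across a list of prompts and counts frames at the 25 frames per second mentioned earlier. The function name `plan_clips`, the even split, and the example prompts are assumptions.

```python
FPS = 25  # frame rate quoted earlier in this article


def plan_clips(song_seconds, prompts, fps=FPS):
    """Split a song evenly across prompts.

    Returns a list of (prompt, start_seconds, end_seconds, frame_count).
    An even split is only a starting point; real edits usually follow
    the song's sections instead.
    """
    if not prompts:
        raise ValueError("at least one prompt is required")
    clip_length = song_seconds / len(prompts)
    plan = []
    for index, prompt in enumerate(prompts):
        start = index * clip_length
        end = (index + 1) * clip_length
        # Count frames from rounded boundaries so the clips add up exactly.
        frames = round(end * fps) - round(start * fps)
        plan.append((prompt, start, end, frames))
    return plan


# Example: a three-minute song with three made-up prompts.
plan = plan_clips(180, ["neon city at night", "desert sunrise", "underwater ruins"])
for prompt, start, end, frames in plan:
    print(f"{start:6.1f}s to {end:6.1f}s  {frames} frames  {prompt}")
```

Each extra prompt shortens the clips, so a longer prompt list means more visual variety but also more scenes to review.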

Render the final video

Click on Render once you've completed your edit. You can go back at any time to change your music video until you’re happy with the results!

Creating high-quality budget music videos is so much easier now. Our stimulating animation style minimizes the need for special effects and post-production, making it an ideal solution for artists and indie filmmakers or video directors.

Traditional music video production required significant resources and expertise. AI provides a cost-effective and accessible approach that offers endless artistic possibilities for experts and beginners. Save time and resources while creating things that budget constraints might otherwise have put out of reach.

We offer a text-to-image generator to address the growing need for visual content without relying on stock footage. In the next section of this article, we'll explain how to make a music video using traditional techniques and highlight how it compares to an AI workflow.

How to shoot a music video (compared to AI)

Most of the traditional techniques for shooting a music video still apply to content creation on an AI video platform. In this section we’ll cover the basics like shot lists, concept development, and post-production.

1. Pre-production: Coming up with your video concept

Pre-production and concept development are the first stage of this creative process. Producers will work with their team to define the vision and map out operations before filming. Since AI-generated videos don't typically require a camera, lighting or crew, the most important step will be conceptualization. We can separate that into three parts:

- Song analysis: Listen closely to the music and let the emotions guide you through different possibilities for imagery or narrative. What is the song about and how will the video draw that out for the target audience?

- Choosing a narrative or theme: Will your video tell a linear story with traditional characters and narrative arc, or is it more poetic and experimental? This is the phase where you lock in the high-level concept of the video, before figuring out all of the details.

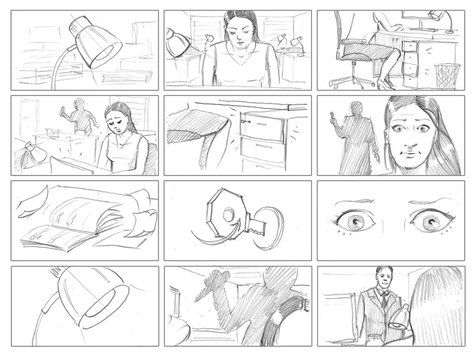

- Storyboarding: Once the music video’s concept is clear, sketch out the most important shots and scenes in a storyboard. You can use an app like DALL-E 3 to create the images if you don’t have a concept artist available to help you. Your text-to-image prompts can include details about the lighting and angles. Seeing the ideas laid out in a visual format will help later when it’s time to begin creating your video content.

When generating your shot list, it’s a good idea to line up ideas for b-roll footage that you can splice in between the most important moments. Footage of landscapes and ambient scenes will help set the mood for your video, even if they don’t contribute directly to the narrative.

2. Choosing your equipment and software

In a traditional video shoot, production value is closely tied to budget. Teams with more money can afford to rent or purchase superior equipment, operate on an extended filming schedule, and hire a bigger crew.

As you can imagine, making videos with artificial intelligence requires far less equipment. This is especially true when using a cloud-based service like Neural Frames. All you need is an internet connection and a web browser to get started.

Here’s a quick overview of the equipment that would have gone into making a music video:

- Video camera and lenses: Cinema cameras (e.g., ARRI Alexa, RED DSMC2, Sony CineAlta) boast higher dynamic range and resolution, while cheaper DSLR and mirrorless cameras (e.g., Sony Alpha A7 III, Blackmagic Pocket Cinema Camera 4K) have lower image quality and fewer customization options. Low budget content creators sometimes use Apple iPhones.

- Good lighting: The atmosphere of a space is greatly influenced by lighting. LED lights are an excellent choice for a vibrant and dynamic feel, while tungsten lights create a cozy and intimate ambiance. Conversely, fluorescent lights evoke a cold and sterile mood, so they would only be used when aiming for that aesthetic.

- Audio equipment: Some music videos include interludes not part of the original track, like dialogue or a moment of live performance from the band. Crew sometimes need microphones for vocals and live instruments, a mixing console, audio interfaces, cables, headphones, and a monitoring system for playback.

AI Setup

If you plan on making additional changes to the video footage in post-production, it will be helpful to have video editing software like Adobe Premiere Pro, DaVinci Resolve, iMovie, or Final Cut Pro.

Artists with programming chops have also been generating AI music videos locally on their computer. There’s a high cost associated with obtaining the graphics cards and memory required to run AI video generation locally.

3. Shooting content versus generating a music video

Making music videos is a collaborative process with many moving parts. High-budget operations often have more than 300 people on set. Directors and producers will scout out a location beforehand and pay for permits or rentals to ensure they’re available on the day of the shoot.

When it’s time to start rolling the camera, skilled directors will already have their shot list and angles prepared. They work hard to build rapport with their talent to make sure they can get the performances they need, without conflict or misunderstanding.

Videographers should be skilled in cinematography and adept at capturing both close-ups and wide-angle shots. Camera angles add visual interest and keep viewers engaged.

4. Post-production: Assembling your music video

Traditional video post-production tasks like color grading and visual effects can add a lot of value to AI-generated video content. Whether you’re shooting live video footage or generating it with AI, you’ll need to have a video editor like Adobe Premiere Pro to do post-production. Here’s an overview of some important things to consider.

- Video editing: To get started, drag your video and audio assets into the editing software. You’ll construct the final video on your timeline before getting into effects. Adobe Premiere Pro offers stock video templates that can help guide this process.

- Effects and transitions: Add transitions and special effects to enhance specific moments and maintain flow between shots and scenes. Traditionally this is where imagery would be swapped in for green screens.

- Syncing sound effects, music, and visuals: As explained in the tutorial above, AI video generation can help you to automatically sync music and visuals through effect modulation. If band members decided to lip sync their lyrics, this is the phase where you would line that footage up with the song. You might also add text overlays for lyric videos.

- Color grading: Even with AI video generation, post-production treatment of the color grading and tone will enhance the visual appeal of the finished content.

If you're new to creating videos and don't want to spend time learning post-production, consider using a freelancer platform like Upwork or Fiverr. You'll find affordable contractors on these sites who can help fine tune your work.

Publish to social media: With millions of active users, YouTube is one of the largest video-sharing platforms, providing a valuable opportunity to reach potential fans. Publishing your music video on your YouTube creator channel and other social media platforms is essential for promoting and expanding your audience. Artists often go on podcasts to promote an album or new video as well.

Want to watch examples of music videos created with AI? Check out the examples here on our YouTube channel: https://www.youtube.com/@neuralframes/videos